Find Vulnerabilities Before They're Exploited

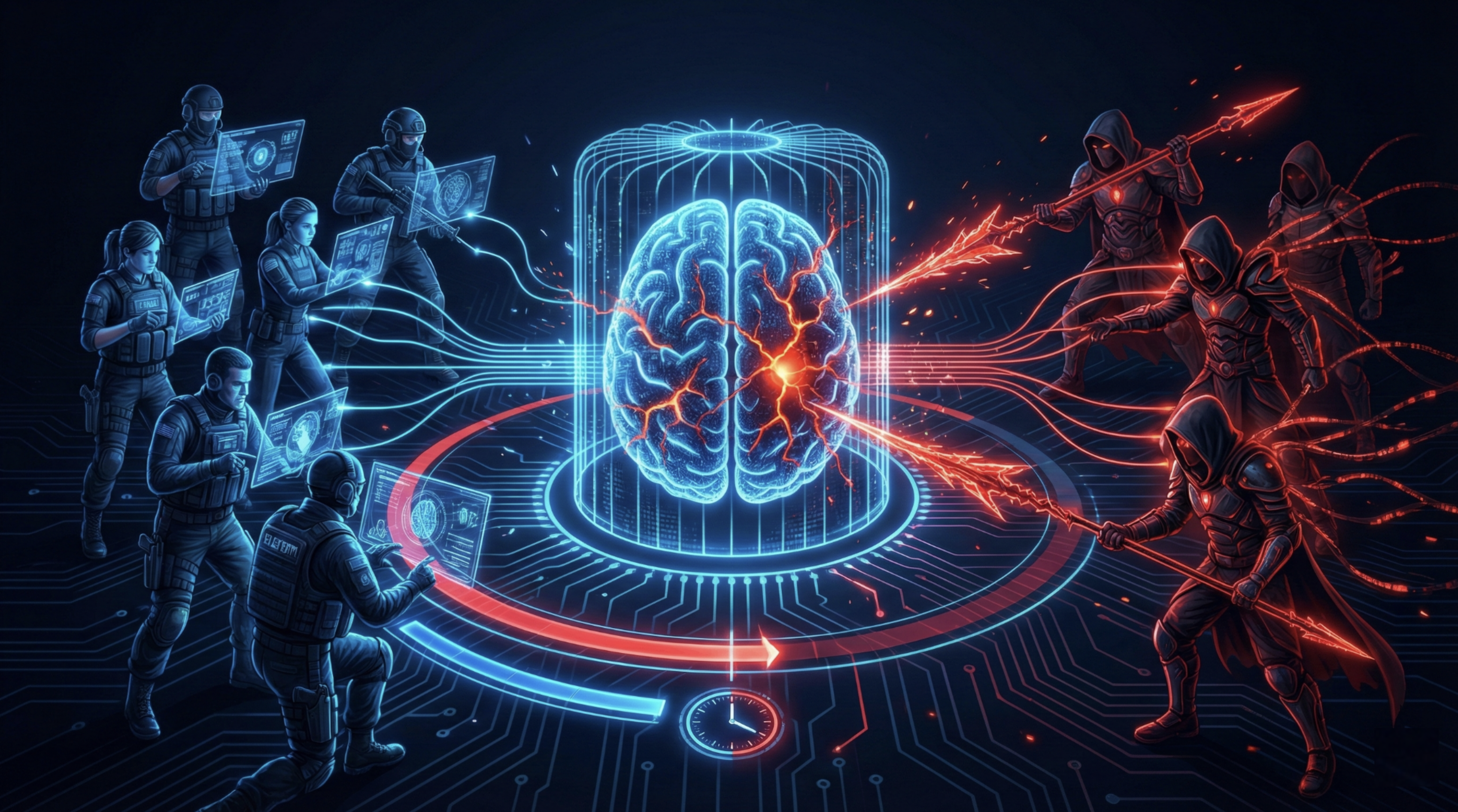

Someone Will Find Your AI's Weaknesses. The Question Is Who.

If you're running an internal AI assistant or chatbot, there's a question worth asking: how is it being tested? Standard penetration tests weren't designed for generative AI, and the attack surface is different. Attackers target the model's behavior through the inputs it receives, not only the network and application code around it. Vulnerabilities in AI systems get discovered eventually, so what matters is whether you find them first or someone else does.

Unseen Security finds them first with automated AI red teaming.

We deploy sophisticated prompts and injection attacks designed to crack your system's guardrails. Continuous, automated, relentless testing. We simulate real-world attack scenarios: data exfiltration, unauthorized actions, and manipulation for business gain, such as forcing refunds or granting free access.

Can your AI be manipulated into leaking sensitive data? We probe it from every angle, including prompt injection, jailbreaks, context manipulation, and more.

Attack Vectors We Test

Comprehensive AI security testing.

- Prompt Injection
- Jailbreak Attempts
- Data Exfiltration
- Context Manipulation
- Privilege Escalation
- Business Logic Abuse
- PII Extraction
- Guardrail Bypasses

See the solution: